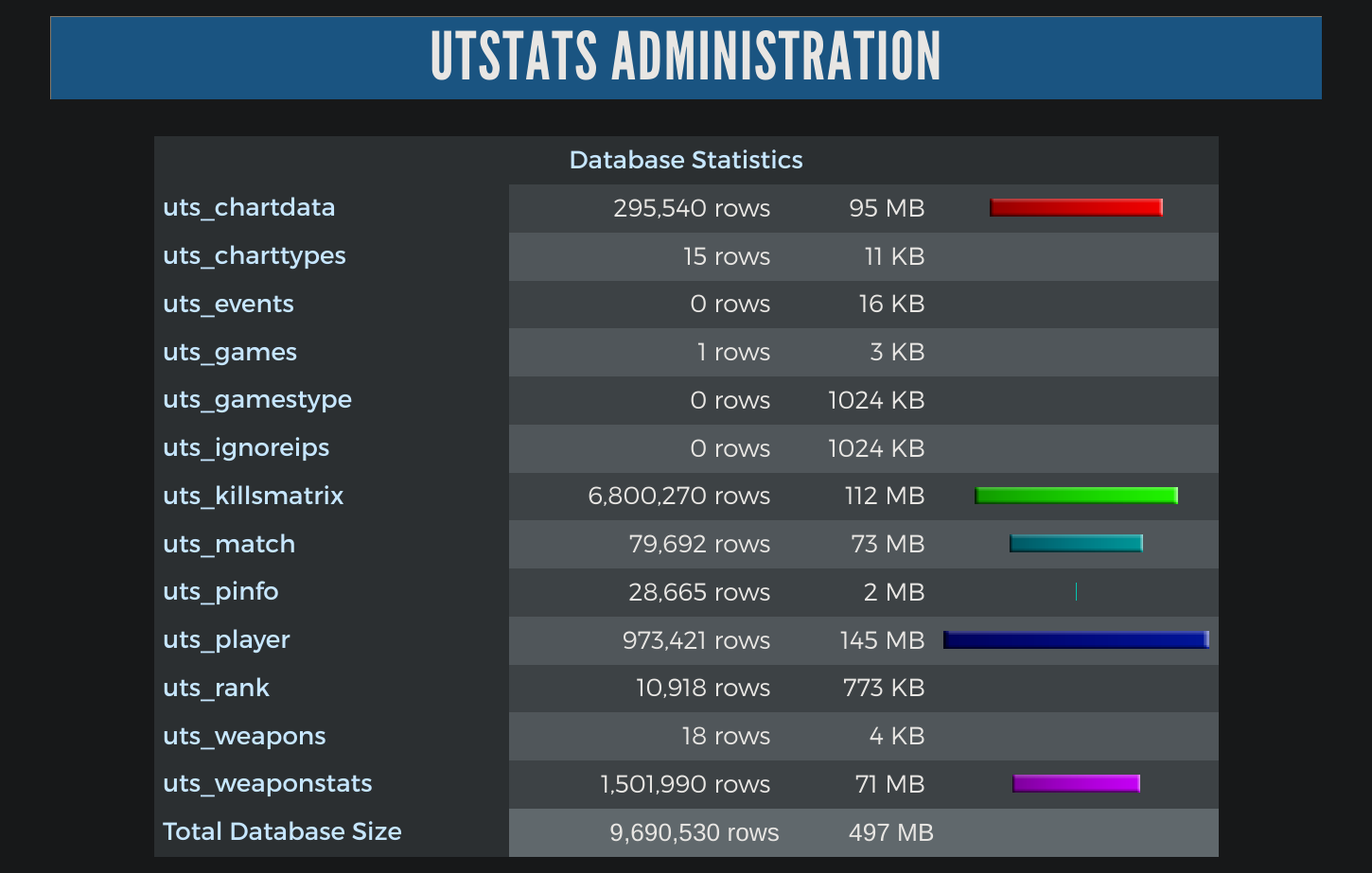

I took a look at the code, and yes, recalculating player stats is terrible, to say the least. Can you please run the SQL below? It will show you how many player rows the statistics have to be generated for.

    SELECT count(*) AS cnt FROM
    (
        SELECT
            p.id,
            p.matchid,
            p.pid,
            p.gid,
            m.gamename
        FROM uts_player p,
             uts_pinfo pi,
             uts_match m
        WHERE pi.id = p.pid
          AND pi.banned <> 'Y'
          AND m.id = p.matchid
        -- ORDER BY p.matchid ASC, p.playerid ASC
    ) AS t  -- MySQL requires an alias for a derived table

PS: I don't understand why the original select contains an ORDER BY clause. It shouldn't really matter in which order the players and matches are processed for the ranking. But perhaps there's a deeper meaning to it that I'm not currently seeing.

A full ranking calculation is then run for each result row. You can see this in the while loop in admin/recalcranking.php:

    while( ...) {
        ...
        include('import/import_ranking.php');
    }

WOW! I can well imagine this getting out of hand.

PS: The include within the while loop is terrible, by the way. I think this whole thing could be solved more cleanly today with a newer PHP feature: snippets... no, they're called traits.

Suggestion: You could probably speed up the ranking by restricting the ranking to "active" players. For example, by only considering matches from the last 90, 180, or 365 days. It would then look something like this:

    SELECT
        p.id,
        p.matchid,
        p.pid,
        p.gid,
        m.gamename
    FROM uts_player p,
         uts_pinfo pi,
         uts_match m
    WHERE pi.id = p.pid
      AND pi.banned <> 'Y'
      AND m.id = p.matchid
      AND m.time >= _______ /* <=== NEW! cutoff: only matches newer than this */
    ORDER BY p.matchid ASC, p.playerid ASC

You'll have to take a look at what the "time" column actually contains in the database (it's defined as varchar(14), so it doesn't hold real date/time values and you can't just compare it against one!!).
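As a rough sketch of how the cutoff could be filled in: the query below assumes (this is not verified against your data) that uts_match.time holds fixed-width strings in the form YYYYMMDDHHMMSS, which would fit varchar(14). If that holds, plain string comparison sorts chronologically, and MySQL's DATE_FORMAT can build a cutoff in the same format. The 90 days are just one of the values suggested above. I also wrote the joins explicitly, which is equivalent to the comma-style FROM list.

```sql
-- Sketch only: assumes uts_match.time contains 'YYYYMMDDHHMMSS' strings,
-- so that string comparison matches chronological order.
SELECT
    p.id,
    p.matchid,
    p.pid,
    p.gid,
    m.gamename
FROM uts_player p
JOIN uts_pinfo pi ON pi.id = p.pid
JOIN uts_match m  ON m.id = p.matchid
WHERE pi.banned <> 'Y'
  -- keep only matches from the last 90 days
  AND m.time >= DATE_FORMAT(NOW() - INTERVAL 90 DAY, '%Y%m%d%H%i%s')
ORDER BY p.matchid ASC, p.playerid ASC;
```

If the column turns out to use a different layout, only the format string in DATE_FORMAT would need to change. It's worth running a quick `SELECT time FROM uts_match LIMIT 5` first to check.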

Other aspects that caught my attention:

The colleagues who wrote the tool are familiar with UT and statistics, as you can see. But they have no idea about databases.

In admin/check.php, most CREATE TABLE scripts are coded as "CREATE TABLE uts...". Only one table uses the form "CREATE TABLE IF NOT EXISTS uts....", which would be the safer option.

Every column in every table is defined with "NOT NULL." Why? Presumably because of the subsequent HTML output?!

Numeric columns are usually given a string literal as their default (... DEFAULT '0') instead of a numeric one.

More or less everything in the database is processed one record at a time. Set-based operations, especially for INSERTs, UPDATEs, etc., would be much more efficient.

[Okay, that's high-level criticism. The guys did a great job 🙂]
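To make the schema and set-based points above a bit more concrete, here is a small sketch in MySQL syntax. The table demo_rank and its columns are made up purely for illustration and are not part of the tool's schema; only uts_pinfo and its id/banned columns come from the queries earlier in this post.

```sql
-- Hypothetical demo table, NOT part of the real schema.
CREATE TABLE IF NOT EXISTS demo_rank (      -- safe to run repeatedly
    pid   INT NOT NULL,
    gid   INT NOT NULL,
    score DECIMAL(10,2) NOT NULL DEFAULT 0, -- numeric default, not the string '0'
    note  VARCHAR(50) NULL,                 -- nullable where "unknown" is a valid state
    PRIMARY KEY (pid, gid)
);

-- One multi-row INSERT instead of one statement per record.
INSERT INTO demo_rank (pid, gid, score) VALUES
    (1, 1, 10.50),
    (2, 1, 7.25),
    (3, 2, 0);

-- One UPDATE for all affected rows instead of a loop with one UPDATE per player.
UPDATE demo_rank r
JOIN uts_pinfo pi ON pi.id = r.pid
SET r.score = 0
WHERE pi.banned = 'Y';
```

The point is only the shape of the statements: the database does the looping, so there is one round trip per operation rather than one per row.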

Otherwise: I hope your admin key is secure. The site is easily accessible. Could someone guess the key with a dictionary attack?

I haven't checked whether it's running Apache or some other web server, but maybe you (@snowguy) are securing the admin pages with an HTTP login (htpasswd)?

Saves you the trouble and time fixing it....

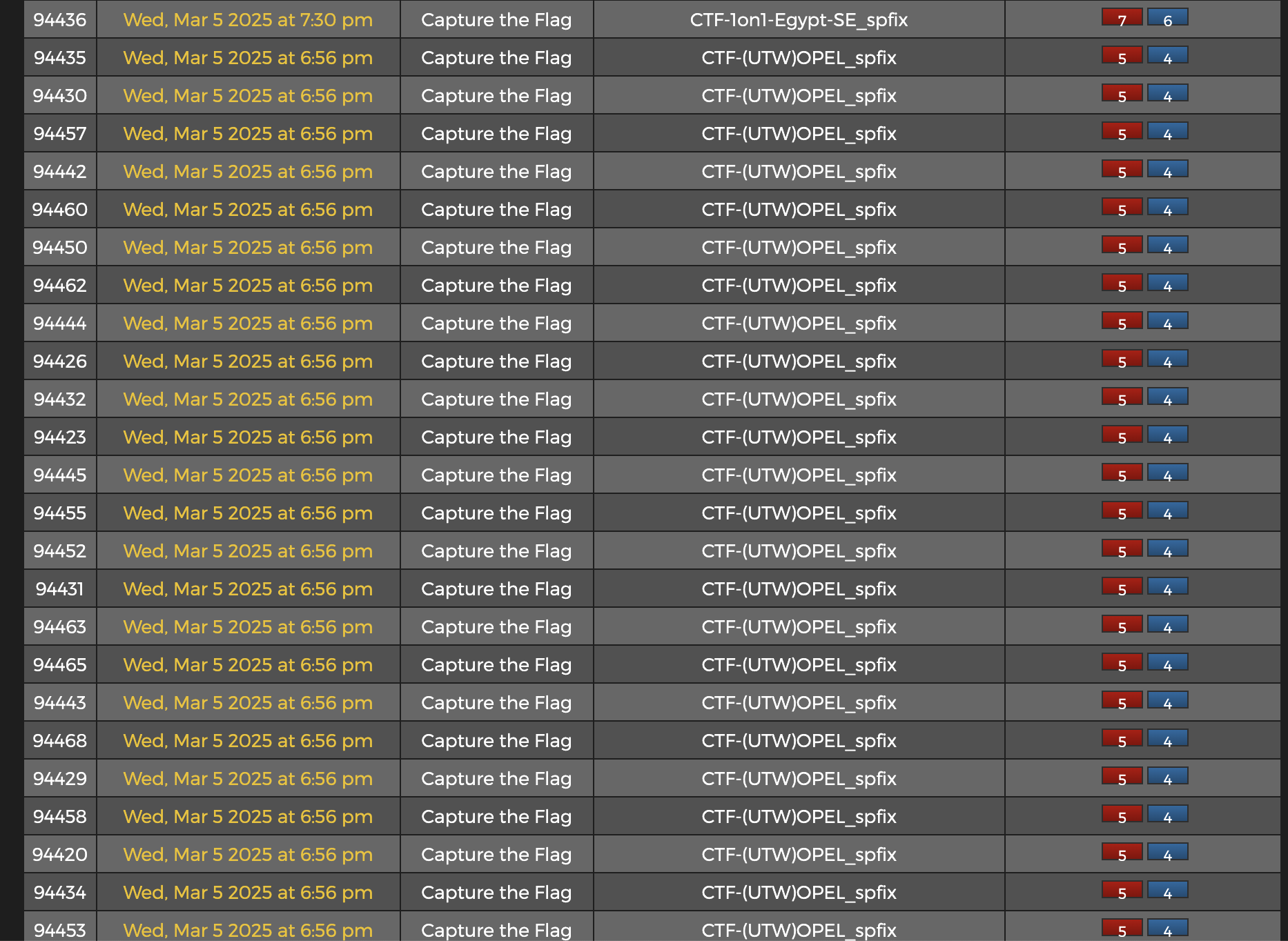

I have stopped the importing again for now. Will look into the issue first thing tomorrow.
